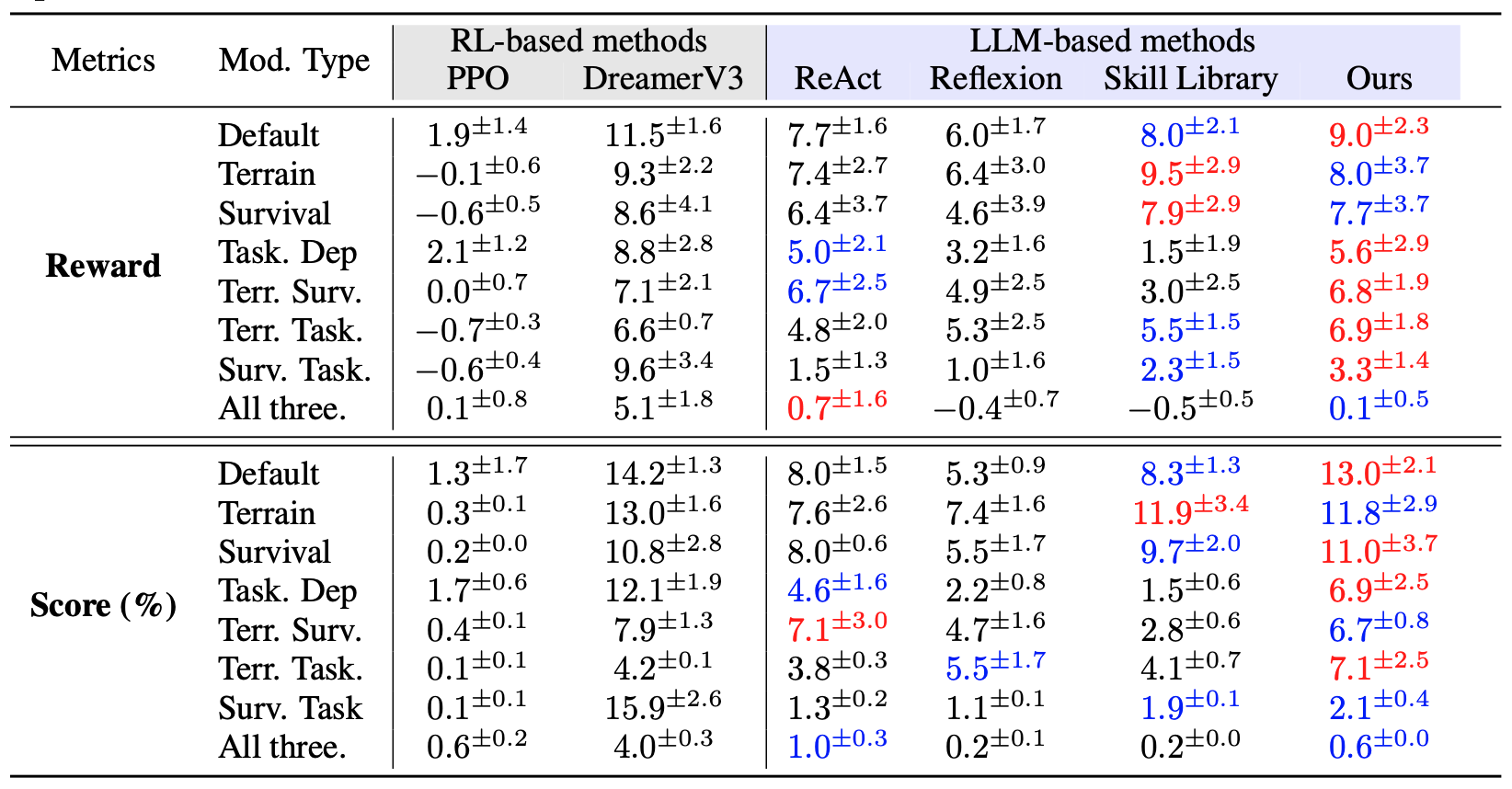

Rewards on the 8 world settings of Mars. Results for LM-based methods are summarized over 9 independent trials, and results for RL methods over 20 independent trials.


| # | Method | Model | Source | ALL (Excl. Default) | Default | Terrain | Survival | Task Dep. | Terr. Surv. | Terr. Task. | Surv. Task. | All Three |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | IfR 🥇 | GPT-4-0125-preview | Link | 5.5 | 9.0\(^{\pm 2.3}\) | 8.0\(^{\pm 3.7}\) | 7.7\(^{\pm 3.7}\) | 5.6\(^{\pm 2.9}\) | 6.8\(^{\pm 1.9}\) | 6.9\(^{\pm 1.8}\) | 3.3\(^{\pm 1.4}\) | 0.1\(^{\pm 0.5}\) |
| 2 | ReAct 🥈 | GPT-4-0125-preview | Link | 4.6 | 7.7\(^{\pm 1.6}\) | 7.4\(^{\pm 2.7}\) | 6.4\(^{\pm 3.7}\) | 5.0\(^{\pm 2.1}\) | 6.7\(^{\pm 2.5}\) | 4.8\(^{\pm 2.0}\) | 1.5\(^{\pm 1.3}\) | 0.7\(^{\pm 1.6}\) |
| 3 | Skill Library 🥉 | GPT-4-0125-preview | Link | 4.2 | 8.0\(^{\pm 2.1}\) | 9.5\(^{\pm 2.9}\) | 7.9\(^{\pm 2.9}\) | 1.5\(^{\pm 1.9}\) | 3.0\(^{\pm 2.5}\) | 5.5\(^{\pm 1.5}\) | 2.3\(^{\pm 1.5}\) | -0.5\(^{\pm 0.5}\) |
| 4 | Reflexion | GPT-4-0125-preview | Link | 3.6 | 6.0\(^{\pm 1.7}\) | 6.4\(^{\pm 3.0}\) | 4.6\(^{\pm 3.9}\) | 3.2\(^{\pm 1.6}\) | 4.9\(^{\pm 2.5}\) | 5.3\(^{\pm 2.5}\) | 1.0\(^{\pm 1.6}\) | -0.4\(^{\pm 0.7}\) |
| 5 | IfR | LLaMA-3.1-8B-instruct | Link | 2.9 | 3.8\(^{\pm 2.4}\) | 3.8\(^{\pm 2.1}\) | 3.7\(^{\pm 2.8}\) | 2.9\(^{\pm 1.0}\) | 3.8\(^{\pm 2.0}\) | 3.3\(^{\pm 1.2}\) | 1.1\(^{\pm 1.3}\) | 0.8\(^{\pm 1.4}\) |
| 6 | ReAct | LLaMA-3.1-8B-instruct | Link | 1.7 | 3.6\(^{\pm 2.1}\) | 2.1\(^{\pm 2.2}\) | 2.3\(^{\pm 2.5}\) | 2.3\(^{\pm 1.0}\) | 1.1\(^{\pm 1.4}\) | 3.0\(^{\pm 1.6}\) | 0.7\(^{\pm 2.0}\) | 0.2\(^{\pm 1.2}\) |
| * | PPO | RL-based* | Link | 0.0 | 1.9\(^{\pm 1.4}\) | -0.1\(^{\pm 0.6}\) | -0.6\(^{\pm 0.5}\) | 2.1\(^{\pm 1.2}\) | 0.0\(^{\pm 0.7}\) | -0.7\(^{\pm 0.3}\) | -0.6\(^{\pm 0.4}\) | 0.1\(^{\pm 0.8}\) |
| * | DreamerV3 | RL-based* | Link | 7.9 | 11.5\(^{\pm 1.6}\) | 9.3\(^{\pm 2.2}\) | 8.6\(^{\pm 4.1}\) | 8.8\(^{\pm 2.8}\) | 7.1\(^{\pm 2.1}\) | 6.6\(^{\pm 0.7}\) | 9.6\(^{\pm 3.4}\) | 5.1\(^{\pm 1.8}\) |

ALL (Excl. Default): Average reward over all worlds except Default, i.e., overall performance on the counter-commonsense worlds.
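The aggregate column can be reproduced approximately from the per-world columns. The sketch below assumes ALL (Excl. Default) is an unweighted mean of the seven non-Default per-world means. The function name `all_excl_default` is hypothetical, and because the table entries are rounded, a recomputed value may differ from the published one in the last digit.

```python
from statistics import mean

# Per-world mean rewards for one leaderboard row (IfR with GPT-4-0125-preview),
# copied from the table above.
rewards = {
    "Default": 9.0,
    "Terrain": 8.0,
    "Survival": 7.7,
    "Task Dependency": 5.6,
    "Terr. Surv.": 6.8,
    "Terr. Task.": 6.9,
    "Surv. Task.": 3.3,
    "All Three": 0.1,
}


def all_excl_default(per_world):
    """Unweighted average over every world except Default (assumed definition)."""
    return mean(v for name, v in per_world.items() if name != "Default")


score = round(all_excl_default(rewards), 1)
print(score)
```

For this row the recomputed value matches the 5.5 reported in the table.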

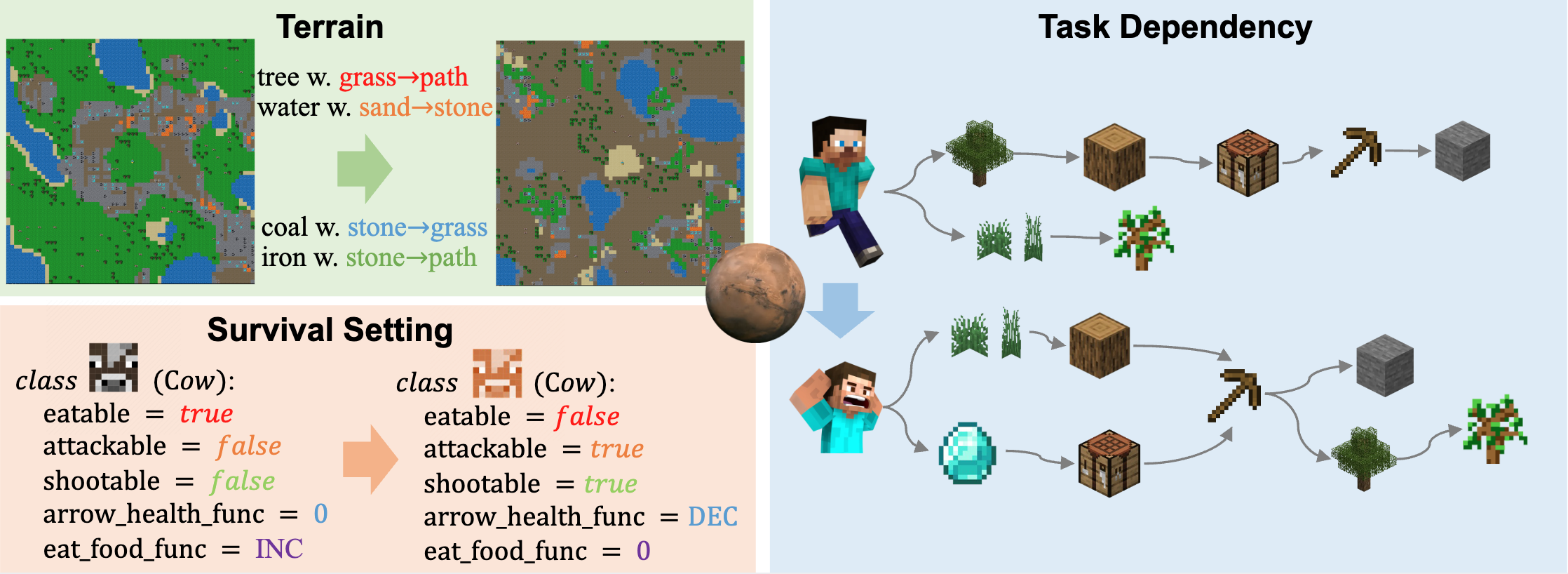

World types: Default is the original Crafter setting, i.e., no modifications. Terrain, Survival, and Task Dependency are the three types of modification. Terr. Surv., Terr. Task., Surv. Task., and All Three combine two or all three of these types.
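The count of 8 worlds follows from taking every subset of the three modification types. The short sketch below only illustrates that counting. It is not the benchmark's world-generation code, and `enumerate_worlds` is a hypothetical helper name.

```python
from itertools import combinations

# The three modification types named above.
MODIFICATIONS = ("Terrain", "Survival", "Task Dependency")


def enumerate_worlds(mods=MODIFICATIONS):
    """Return every subset of the modification types, smallest first."""
    worlds = []
    for k in range(len(mods) + 1):
        worlds.extend(combinations(mods, k))
    return worlds


worlds = enumerate_worlds()
for world in worlds:
    # The empty subset corresponds to the unmodified Default world.
    print(" + ".join(world) if world else "Default")
```

The empty subset is Default, the three singletons are the single-modification worlds, the three pairs are the two-way combinations, and the full set is All Three.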

🚨 To submit your results to the leaderboard, please send your result JSON files to this email.